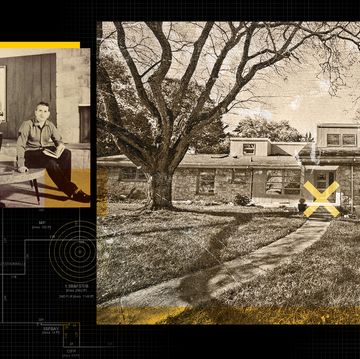

It was a chilly afternoon in July 2010, and Stephen Farrell, a computer scientist from Trinity College Dublin, found himself surrounded by reindeer. Thousands of reindeer. Farrell had just arrived at Skuolla, a Swedish village just north of the Arctic Circle. Tonight, his hosts, a group of quasi-nomadic Sami reindeer herders, would begin to mark their calves, checking for the specific cuts in each reindeer mother's ears and then slicing the same pattern into her offspring.

After an evening of watching the spectacle, Farrell retired to his teepee for the night to rest up for the long day ahead of him. The reindeer herders would be in Skuolla for only a week before they dispersed with their herds, and Farrell and his team of graduate students had work to do.

The reason for Farrell's Arctic sojourn had little to do with the notches in reindeer ears. It was about bringing the internet to the end of the world. When the herders dispersed at the end of the week, Farrell and his team would follow them to three different villages situated throughout Padjelanta National Park, all of them at least 12 miles from any sort of power or networking infrastructure. Before the calf-marking meetup, the students set up solar-powered Wi-Fi hotspots and other networking elements in each of the villages. Over the course of the following eight weeks in July and August, Farrell and his team traversed the Arctic tundra in a helicopter, acting as data mules, physically ferrying data from the local village networks back to the internet to give the Sami people access to (highly delayed) email. The goal was to test an experimental data transfer procedure known as the Bundle Protocol, designed for use in extreme environments where the constant connectivity needed for regular internet access isn't available.

For the Sami reindeer herders, Farrell and his colleagues' work meant the ability to communicate with their loved ones via email—and fewer helicopter rides back to the grid to check their bank accounts. But for Farrell and a host of others, connecting this isolated population of Arctic herders to the web was about something much bigger: A 250-million-mile wide network which will span the solar system and help to dispel the sense of total isolation that will surely be experienced by the first Martians. The interplanetary internet.

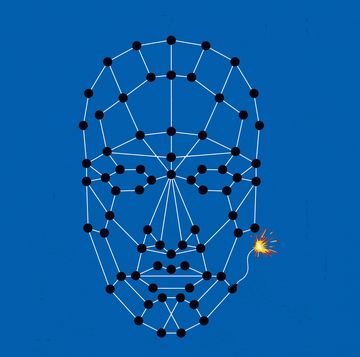

Network of Nodes

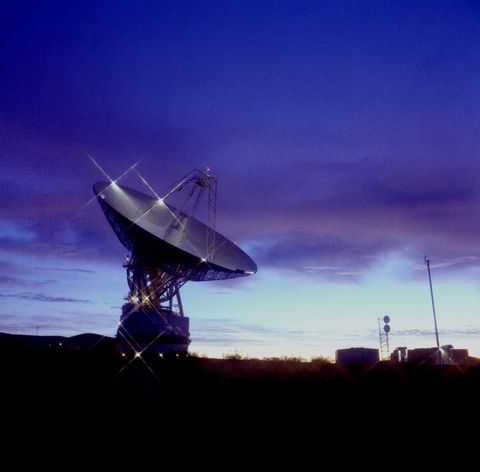

Ever since the first American spacecraft went orbital in 1958, NASA's craft have communicated by radio with mission control on Earth using a group of large antennas known as the Deep Space Network. For a few lonely probes talking to the home planet, that worked fine. In the decades since then, as NASA and other space agencies have accumulated dozens of satellites, probes, and rovers on or around other planets and moons, the Deep Space Network has become increasingly noisy. It now negotiates complex scheduling protocols to communicate with more than 100 spacecraft.

Most rovers (both lunar and Martian) talk to the Deep Space Network in one of two ways: by sending data directly from the rover to Earth or by sending data from the rover to an orbiter, which then relays the data to Earth. Although the latter method is wildly more energy efficient because the orbiters have larger solar arrays and antennas, it can still be error-prone, slow, and expensive to maintain.

The future of space travel demands better communication. The pokey pace at which our current Martian spacecraft exchange data with Earth just isn't enough for future inhabitants who want to talk to their loved ones back home or spend a Saturday binge-watching Netflix. So NASA engineers have begun planning ways to build a better network. The idea is an interplanetary internet in which orbiters and satellites can talk to one another rather than solely relying on a direct link with the Deep Space Network, and scientific data can be transferred back to Earth with vastly improved efficiency and accuracy. In this way, space internet would also enable scientific missions that would be impossible with current communications tech.

"We have to convince the scientists that they should feel free to plan missions that are not supported by point-to-point radio links," says Vint Cerf, Google's Chief Internet Evangelist. "We can increase the ambition factor dramatically by providing them with a rich and adaptive communication system."

The question is, how do you make the internet work in an environment as sprawling and hostile as the solar system?

Conceptually, at least, the interplanetary internet is similar to the terrestrial internet you're using to read this. It's a network of nodes passing packets of information back and forth. Yet unlike the terrestrial internet, this network of networks is linked together across millions of miles, and its local networks are made of spacecraft on or around other planets. The backbone could be made of old scientific orbiters repurposed to act as data relay links, connecting each of the localized planetary internets with one another. The end result? An internetwork that spans the solar system. In theory, anyway.

The idea of interplanetary networking (IPN) seems so simple and elegant that it almost sounds mundane. Yet taking cyberspace into deep space involves a host of challenges the engineers working on the terrestrial internet would never encounter.

Let's start with the basic issue of connectivity. On Earth, the backbone of the web is the more than 500,000 miles of fiber-optic cable crisscrossing the ocean floor and connecting fixed hubs, allowing for the seamless end-to-end connectivity that is one of the core architectural principles of the internet. While this works great for our blue marble, there's no way we're running fiber-optic cable to Mars. Instead, building a space internet means dealing with the vast distances and spotty connectivity inherent in anything interplanetary.

When the spans between data relay satellites are measured in millions of miles, delays can last up to several hours. For instance, the signal delay between Mars and Earth can range from 3 to 20 minutes depending on the position of the planets. As nerve-wracking as waiting 20 minutes for a message from Mars might seem, it probably sounded pretty good to the mission scientists working on the New Horizons Pluto flyby last July, where the latency between Earth and the spacecraft spanned about four and a half hours. Such massive delays between hitting send and receiving a reply are totally foreign to the connected citizens of Earth, who get frustrated when an instant message isn't answered instantly. Here on the home planet, we also don't have to deal with disruptive events such as solar flares and planetary transits—when a planet moves in front of a satellite or node and blocks the signal.
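Those delays come straight from the speed of light, and the arithmetic is easy to check. The sketch below is only a back-of-the-envelope illustration: the distances are rough assumed values picked for the example (they are not figures from this article), and the function name is made up. With these assumptions, Mars comes out at about 3 minutes at its nearest and a little over 20 at its farthest, while a Pluto-distance probe lands in the neighborhood of four and a half hours.

```python
# One-way signal delay = distance / speed of light.
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact, by definition of the metre

# Assumed, rounded distances from Earth (illustrative only).
MARS_CLOSEST_KM = 55e6
MARS_FARTHEST_KM = 400e6
PLUTO_FLYBY_KM = 4.8e9


def one_way_delay_minutes(distance_km: float) -> float:
    """Return how long a radio or laser signal takes to cover distance_km, in minutes."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60.0


if __name__ == "__main__":
    for label, km in [
        ("Mars, closest approach", MARS_CLOSEST_KM),
        ("Mars, farthest", MARS_FARTHEST_KM),
        ("Pluto flyby", PLUTO_FLYBY_KM),
    ]:
        minutes = one_way_delay_minutes(km)
        print(f"{label}: {minutes:.1f} minutes ({minutes / 60:.2f} hours)")
```

Note that these are one-way figures. A question and its answer each make the trip, so the wait for a reply is at least double.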

Having to work across such unfathomable distances with such unfavorable conditions means reinventing how the internet works. That's why NASA has gone back to the beginning, to the man who invented the internet as we know it in the first place.

Light Speed Is Not Enough

Cerf is now Google's Chief Internet Evangelist, but he is perhaps better known by his more illustrious title of "Father of the Internet." In 1998—when Cerf met up with NASA JPL senior scientist Adrian Hooke to discuss the art and science of interplanetary networking—he had long since made a name for himself by developing Transmission Control Protocol (TCP) and Internet Protocol (IP), some of the standards that make the web what it is today. But as Cerf and Hooke quickly realized, what works for the web may not work in the void.

Whether you're watching 30 Rock reruns or sending an email over the internet, your data moves through a stack of protocols that carry it from point A to point B. The process begins with application protocols that vary depending on the program: HTTP for browsing the web, FTP for file transfers, SMTP for email. Those application protocols talk to the TCP layer, which is responsible for splitting your data into packets and making sure they can be put back in the correct order. The TCP layer then talks to the IP layer, which marks each packet with the address of the computer sending the data and the address of the computer receiving it. Finally, these addressed packets make their way to the link and physical layers, which handle the way data is actually sent between computers (over fiber-optic cables or Wi-Fi).
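For the curious, that layering can be mimicked in a few lines. This is a toy sketch, not real TCP/IP: the function names, the addresses, and the five-byte chunk size are all invented for illustration, and real TCP numbers bytes rather than whole chunks. It shows only the two jobs described above, which are cutting data into ordered pieces and stamping each piece with sender and receiver addresses.

```python
import random


def segment(data: bytes, size: int) -> list[dict]:
    """TCP-like step: cut the data into numbered chunks."""
    return [
        {"seq": i, "payload": data[offset:offset + size]}
        for i, offset in enumerate(range(0, len(data), size))
    ]


def address(segments: list[dict], src: str, dst: str) -> list[dict]:
    """IP-like step: wrap each chunk with sender and receiver addresses."""
    return [{"src": src, "dst": dst, "segment": s} for s in segments]


def reassemble(packets: list[dict]) -> bytes:
    """Receiver side: sort by sequence number and stitch the payloads together."""
    ordered = sorted((p["segment"] for p in packets), key=lambda s: s["seq"])
    return b"".join(s["payload"] for s in ordered)


message = b"Greetings from Skuolla"
# Hypothetical addresses, chosen only for the example.
packets = address(segment(message, 5), src="10.0.0.1", dst="10.0.0.2")

random.shuffle(packets)  # packets may arrive in any order
print(reassemble(packets) == message)
```

The sequence numbers are what let the receiver rebuild the message no matter how the packets were shuffled along the way.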

TCP/IP works great for terrestrial internetworking. It forms the spine of today's internet, and it's built with the idea that connection disruptions are the exception rather than the rule. But for the problems of taking the internet to space—disruption, delay, and noise—Cerf's terrestrial internet protocols simply wouldn't cut it.

"When I met the JPL team for the first time we were finishing each other's sentences within the first 30 seconds," Cerf said. "It was very stimulating and exciting—we instantly bonded and quickly realized interplanetary networking wasn't just a matter of running TCP/IP between the planets. The speed of light was too slow, and we didn't know how to fix that."

Because outer space forbids constant connectivity, Cerf and the others homed in on the idea of a "store and forward" approach to routing, in which data hops from one node to the next and is stored there until a path to the next node opens up. Think of it as a long flight with several layovers—the data is the passenger, hanging out at the connecting airport until its flight can leave for the next node.

In 1998, DARPA began funding a small group of scientists at NASA's Jet Propulsion Laboratory (JPL) who were tasked with studying how store-and-forward data transfer might work in space. To overcome TCP's shortcomings in space, Cerf, Hooke, and their JPL colleagues developed the Bundle Protocol (BP), which has become a go-to term for what are more formally called Delay Tolerant Networks, or DTN, the kind of thing you'd need for internet in space.

At its core, BP is like any other store-and-forward approach to data transmission. Essentially, it ties together lower-layer internet protocols (such as transport, link, and physical) so data transmissions happen in self-contained "bundles." The bundles are forwarded from node to node and stored at each one until a connection allows the bundle to be passed toward its destination. In a sense, this is similar to what Farrell and his team were doing in the Arctic by taking packets of information and physically moving them between the reindeer herding villages. Only this time it's on a cosmic scale, where the villages are satellites, rovers, and Martian colonies; the helicopters are radio waves and lasers; and reindeer are nowhere to be found.

The Lego Test

The best thing about Bundle Protocol is that it works. We know because it's already taken the internet off the planet.

In 2008, a U.K. satellite was the first to use BP, sending a large image file to a ground station. Following that trial run, BP was uploaded later that year to NASA's revamped Deep Impact spacecraft (originally meant to study comets by slamming into them), making it the first deep space node for the interplanetary internet.

In early 2009, the first International Space Station experiment to use these protocols went live, moving extraterrestrial internet from pure experiment into an actual working technology. DTN software was uploaded to two testing units on the ISS, which tested how the bundle protocols would hold up to transmission delays of several minutes without any feedback. Kevin Gifford, who outfitted the testing units with DTN capabilities while working as a senior aerospace researcher at the University of Colorado, says once engineers realized how much more science they could get from the space station this way, DTN quickly became the standard.

"With DTN, we were able to get a thousand times improved data downlink because of the efficiency of disruption tolerant networking," he says. "So it quickly went into operational mode and it's been that way ever since. We proved a point there."

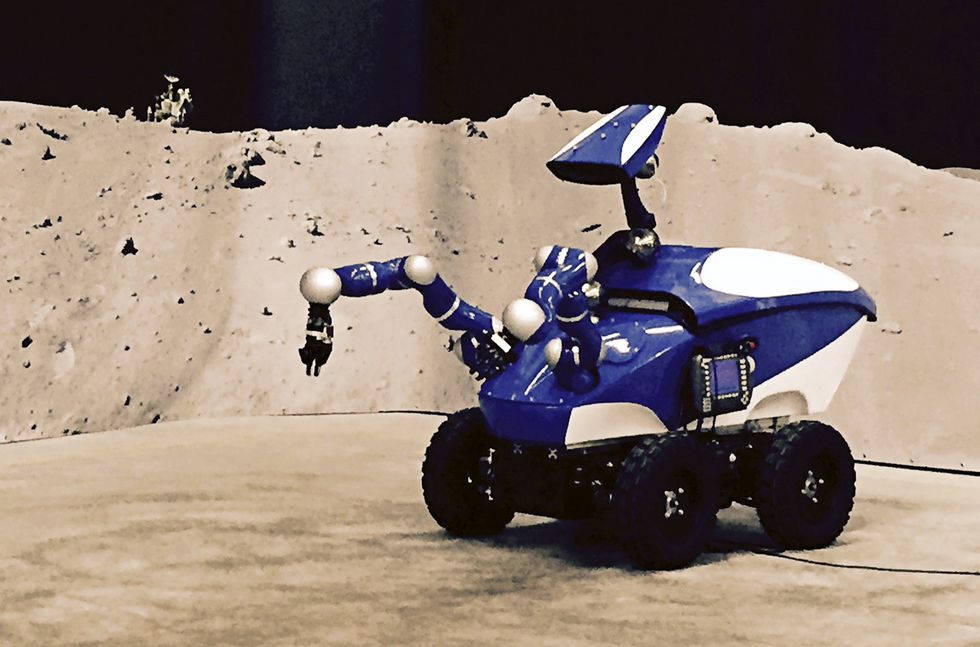

In 2012, NASA and the European Space Agency showed they could use a network driven by DTN even to drive a rover. One made of Legos, anyway. Astronauts on the ISS steered the plastic robot over a mock planetary landscape in Germany, demonstrating the feasibility of future astronauts in orbit around Mars using DTN to operate rovers on the planet's surface.

To show that Bundle Protocol is for more than just toy cars, the European Space Agency designed an experiment to emulate a scenario in which astronauts in orbit around Mars operate a rover on the planet's surface. Last September, ESA and JAXA astronauts completed a 10-day proof-of-concept mission in which they drove a 2,000-pound rover located in Germany from the ISS.

Getting a Foot in the Hatch

To get the interplanetary internet going, you need to create a backbone of long-haul links that would act as data relays between planetary bodies. Think of them as the primary nodes that connect those varying networks of rovers and orbiters into a network spanning the solar system.

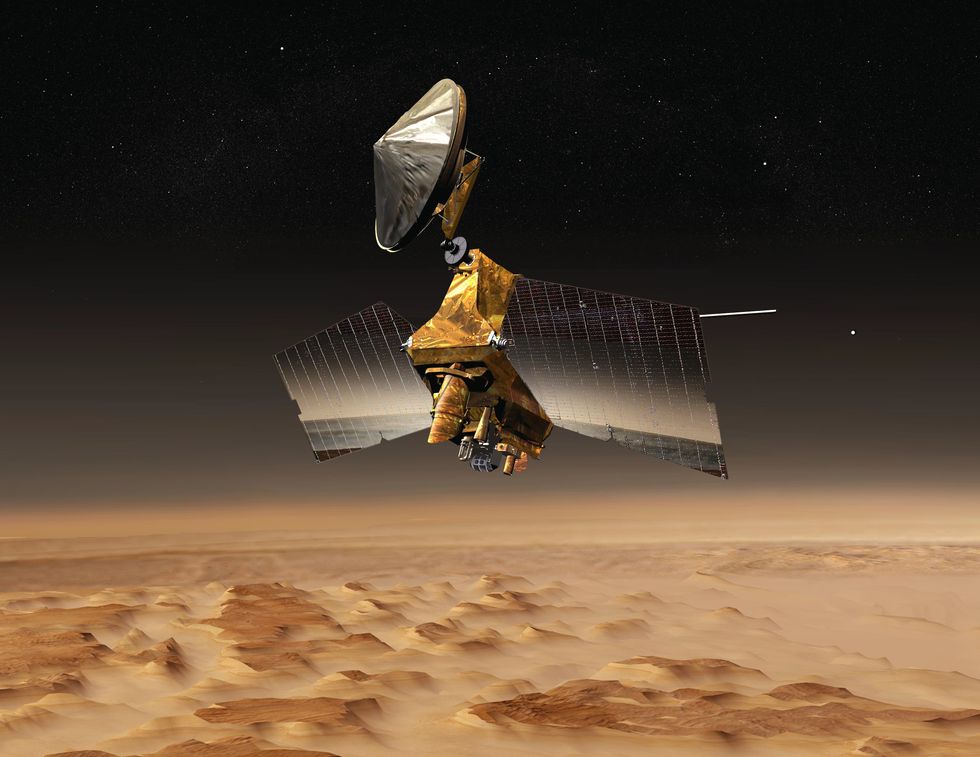

The first serious proposal for a permanent node was the Mars Telecommunications Orbiter (MTO), meant to act as a deep space relay link between rovers on the Red Planet and mission control on Earth, working at extremely high data rates thanks to its pioneering use of lasers (rather than radio waves) for data transmission. The MTO was scheduled to launch in 2009 and arrive in orbit around the Red Planet in 2010, but a budget crunch killed the program in 2005. Other proposals for building the interplanetary internet haven't been lacking; some of the slack from the axed MTO could be picked up by the planned Mars 2022 telecommunications satellite.

Yet the pieces of an actual interplanetary internet have been slow in coming, and the reasons why are familiar to anybody versed in NASA bureaucracy: funding problems and risk aversion.

Gifford, Cerf, and Farrell will tell you: For those at NASA and other space agencies, advanced communication concepts such as the interplanetary network are not a priority when it comes to mission design. That might sound odd, given the technology's potential to overhaul communication in space and open up new kinds of projects. But consider the problem from the point of view of a mission director guiding the design of a new space mission. When mission directors are working on a high-risk project on a relatively tight budget, they don't want to try out experimental communication methods like DTN, no matter how important such networking architecture might be in the long run.

"People who are in charge of mission design are incredibly conservative," says Keith Scott, the director of Space Internetworking Services at CCSDS. "It's frustrating, but I totally get it. If you get put in charge of mission design and development it's probably going to be the one shot you get in your career to do that. You're really highly motivated to not do anything to endanger it."

NASA doesn't exactly have the money to build these space internet nodes, though. So, like it did when it came time to replace the space shuttle, the agency has turned to private industry. In 2014 it called for companies to submit proposals in which they "explore new business models for how NASA might sustain Mars relay infrastructure," but so far no other information has been released indicating that NASA will pursue commercial solutions to relaying data from the Red Planet.

Talking By Laser

While plans to kick-start the space internet stall out, or are just getting going, the systems we use to talk to our spacecraft aren't getting any younger. As we noted earlier, most communications from missions like the Spirit and Opportunity rovers get relayed back to Earth by piggybacking on scientific orbiters like the Mars Reconnaissance Orbiter and Mars Express. While these orbiters have proven remarkably effective at this task, it's not what they were designed for. It's a pressing problem, especially given NASA's focus on Mars. If one of them were to fail, it could jeopardize ongoing missions. Consider: If the rovers had to transmit data directly from Mars' surface to Earth, that would slow down data transmission rates by up to 4,000 times because the rovers are outfitted with much smaller antennas and solar arrays than the orbiters.

In space, as on Earth, building infrastructure just isn't as exciting as building cool cars and fast ships. But doing it now—sending out communications nodes to form up the network as soon as possible—would set the table for much more dynamic exploration of the solar system. Placing a dedicated telecom orbiter around the Red Planet could greatly reduce data transmission times for all future Mars missions.

Now, NASA could save money here by piggybacking on old tech instead of building new. The beauty of the interplanetary internet is that it can be constructed on the corpses of past scientific missions. In many cases, when an orbiter is finished with its primary scientific mission, it is possible to turn it into an interplanetary internet node simply by uploading BP software. Perhaps, long after its scientific mission is complete, the Mars Reconnaissance Orbiter will become another node of the space internet.

But here's the problem with building the network out of old spacecraft: You don't get to take advantage of increasingly sophisticated optical communication technologies (think lasers), or improvements to BP such as bundle streaming service. Until new orbiters begin to be outfitted with state-of-the-art equipment that allows them to take advantage of laser-based communication and bundle streaming service (or telecommunications orbiters are launched specifically for that purpose), realizing data-heavy uses like, say, Netflix in space might be far-fetched. But it's not impossible.

Let's start with laser-based communications. Back in 2013, NASA shattered its communication speed records during a 30-day mission called the Lunar Laser Communication Demonstration (LLCD). Talking to NASA via laser from lunar orbit, the Lunar Atmosphere and Dust Environment Explorer orbiter sent data down to Earth at 622 Mbps and received uploads at 20 Mbps. To put that in perspective, the average internet connection speed in the US is about 12 Mbps and the download speed needed to stream Netflix in HD is about 5 Mbps.

Clearly, NASA has made huge strides in showing that optical communication (aka lasers) holds the potential for a huge expansion of broadband in space. And more broadband means an increased capacity for scientific missions, ranging from establishing a colony on the moon to sending a submarine to explore the methane oceans on Titan, Saturn's largest moon. As Cerf put it, "the next big deal is optical communication."

Then there's the Jet Propulsion Lab's bundle streaming service. The idea here is that delay tolerant networking could do more than simple bulk data transfers from spacecraft: It could support multimedia applications such as streaming live or stored video, so that the future citizens of Mars could send videos back to their loved ones (like those you see in Interstellar) or maybe just download the newest episode of Game of Thrones.

Across the Universe

Establishing an interplanetary network is key to expanding humanity's presence in the solar system. With a number of human exploration missions to the Moon and Mars on the books for the coming decades, it is likely that the status of DTN-based communication technologies in space will rapidly evolve from an interesting, albeit esoteric, experiment to a critical part of future missions. The Bundle Protocol underlying the idea has already been successfully tested, bringing the internet to everyone from isolated Arctic reindeer herders to disaster relief workers to astronauts in orbit. The problem remains convincing others that developing the interplanetary net is a worthwhile venture that is intimately bound up with the quality and quantity of future exploration in the solar system.

"It's truly stunning to me that the space communications part of NASA has not tried to get this done as quickly as possible," Vint Cerf says. "It's amazing how slow some people seem to be in recognizing the utility of these sorts of things. Someone is going to have to say: enough is enough, let's make DTN more available. I don't know who it's going to be, but if I were in charge of NASA, that would be a priority."

Yet in order to shape our extraterrestrial future, it is often necessary to turn to the past. Farrell's experiment with ferrying data to reindeer herders is now five years distant, but the lessons learned during those weeks in the Arctic have proved to be prescient for more recent developments in the struggle to take the internet to space.

For Farrell, one of the most important takeaways from his sojourn north of the 66th parallel was the importance of considering how to integrate user applications into the DTN architecture. In the case of the reindeer herders, one of their primary concerns was pretty mundane: they wanted the ability to access their bank accounts at will. Yet this proved to be a tall order for Farrell and his group because by the end of the experiments, the banks still hadn't found a way to integrate DTN architecture into their systems.

As Farrell noted, this is a hard-won lesson that could prove useful for those tasked with making the interplanetary 'net a reality. If you want to take the internet to space, it's critical for mission scientists to understand the applications that can be run using the interplanetary internet architecture, and how they can benefit the mission. This could range from mission necessities such as increasing the rate and quality of data transmission to helping the first Martians adjust to their isolation by providing terrestrial comforts such as streaming video connections.

"If I was trying to get the interplanetary 'net architecture more widely deployed, I would concentrate on trying to figure out what the mission scientists want from their applications to see what kind of payloads DTN would make sense with," said Farrell. "The presumption to start with is if we get DTN features into the spacecraft, then there are benefits for the mission operation folks and the scientists. The question is how to make it compelling for them."

Although Cerf remains mystified that the interplanetary 'net is not more of a priority for space mission scientists, perhaps the main struggle in building this interplanetary telecommunications network is communication between relevant actors on Earth. The technology and will to make the interplanetary internet a reality are there; the only thing that still needs to be done is to show mission scientists how it will be conducive to their scientific efforts.

So then, should the first Martians expect to be watching Netflix on the Red Planet? Well, that largely depends on whether Cerf, Farrell, Scott and the dozens of other researchers around the globe working on building the interplanetary internet are able to convince highly risk-averse mission scientists that it is a worthwhile investment. It will be an uphill battle, but if the rapid development of the interplanetary 'net in the last few years is any indication, it'd probably be a good idea to pack some popcorn for the journey.